[English original] Stable Diffusion 3 Technical Report


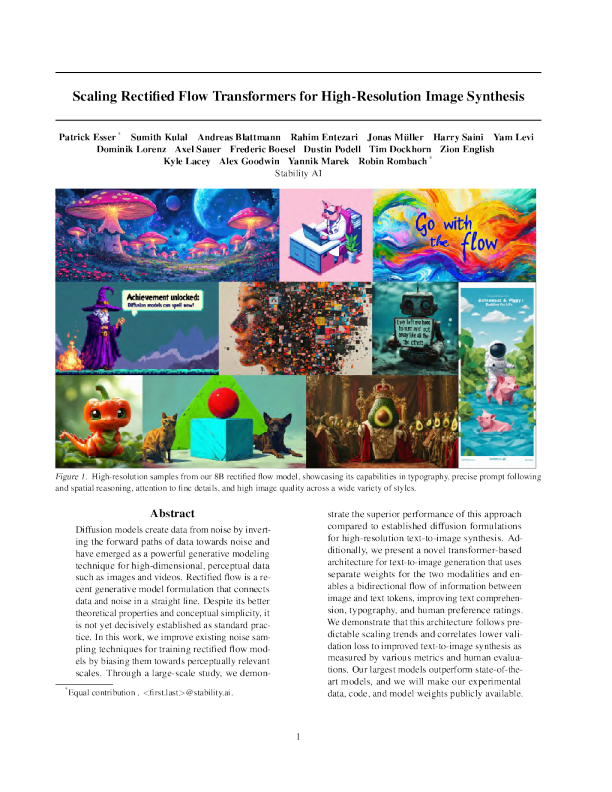

Patrick Esser*, Sumith Kulal, Andreas Blattmann, Rahim Entezari, Jonas Müller, Harry Saini, Yam Levi, Dominik Lorenz, Axel Sauer, Frederic Boesel, Dustin Podell, Tim Dockhorn, Zion English, Kyle Lacey, Alex Goodwin, Yannik Marek, Robin Rombach*

Stability AI

Abstract

Diffusion models create data from noise by inverting the forward paths of data towards noise and have emerged as a powerful generative modeling technique for high-dimensional, perceptual data such as images and videos. Rectified flow is a recent generative model formulation that connects data and noise in a straight line. Despite its better theoretical properties and conceptual simplicity, it is not yet decisively established as standard practice. In this work, we improve existing noise sampling techniques for training rectified flow models by biasing them towards perceptually relevant scales. Through a large-scale study, we demonstrate the superior performance of this approach compared to established diffusion formulations for high-resolution text-to-image synthesis. Additionally, we present a novel transformer-based architecture for text-to-image generation that uses separate weights for the two modalities and enables a bidirectional flow of information between image and text tokens, improving text comprehension, typography, and human preference ratings. We demonstrate that this architecture follows predictable scaling trends and that lower validation loss correlates with improved text-to-image synthesis as measured by various metrics and human evaluations. Our largest models outperform state-of-the-art models, and we will make our experimental data, code, and model weights publicly available.

1. Introduction

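The abstract above names two training ingredients: a path that connects data and noise in a straight line, and a noise sampler biased towards perceptually relevant scales. The sketch below illustrates both under stated assumptions. It assumes the common rectified-flow convention z_t = (1 − t)·x0 + t·ε (t = 0 is data, t = 1 is noise) with the constant velocity ε − x0 as the regression target, and, as one hypothetical way of biasing timesteps towards intermediate noise levels, a logit-normal sampler; the passage does not say which sampler is used. The names `logit_normal_t`, `rectified_flow_pair`, `rf_loss`, and `predict_velocity` (a stand-in for the network) are illustrative, not from the source.

```python
import math
import random


def logit_normal_t(rng: random.Random, m: float = 0.0, s: float = 1.0) -> float:
    """Sample t in (0, 1) as sigmoid(u) with u ~ N(m, s^2).

    Assumption: a logit-normal density is used here as the "biased" sampler;
    it places more mass on intermediate t than uniform sampling does.
    """
    u = rng.gauss(m, s)
    return 1.0 / (1.0 + math.exp(-u))


def rectified_flow_pair(x0, eps, t):
    """Return (z_t, v_target) for the straight path z_t = (1 - t) * x0 + t * eps.

    The velocity dz_t/dt = eps - x0 does not depend on t, which is what
    makes the path between data x0 and noise eps a straight line.
    """
    z_t = [(1.0 - t) * a + t * b for a, b in zip(x0, eps)]
    v_target = [b - a for a, b in zip(x0, eps)]
    return z_t, v_target


def rf_loss(predict_velocity, x0, rng):
    """One-sample Monte Carlo estimate of an unweighted rectified-flow MSE.

    predict_velocity(z_t, t) is a hypothetical stand-in for the network.
    """
    t = logit_normal_t(rng)
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    z_t, v = rectified_flow_pair(x0, eps, t)
    v_hat = predict_velocity(z_t, t)
    return sum((p - q) ** 2 for p, q in zip(v_hat, v)) / len(x0)


if __name__ == "__main__":
    rng = random.Random(0)
    ts = [logit_normal_t(rng) for _ in range(10_000)]
    mid = sum(0.25 < t < 0.75 for t in ts) / len(ts)
    # Uniform sampling would put about 0.50 of the mass in this interval.
    print(f"fraction of t in (0.25, 0.75): {mid:.2f}")
    zero_model = lambda z, t: [0.0] * len(z)  # trivial "network" for the demo
    print(rf_loss(zero_model, [0.5, -1.0, 2.0], rng))
```

The straight path keeps the training target simple (a single constant velocity per data/noise pair), while the sampler only changes *which* noise levels the loss sees most often; swapping the logit-normal for another density leaves the rest of the sketch unchanged.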
… into the model (e.g., via cross-attention (Vaswani et al., 2017; Rombach et al., 2022)), is not ideal, and present a new architecture that incorporates learnable streams for both image and text tokens, which enables a two-way flow of information between them. We combine this with our improved rectified flow formulation and investigate its scalability. We demonstrate a predictable scaling trend in the validation loss and show that a lower validation loss correlates strongly with improved automatic and human evaluations.

Diffusion models create data from noise (Song et al., 2020). They are trained to invert forward paths of data towards random noise and, thus, in conjunction with approximation and generalization properties of neural networks, can be used to generate new data points that are not present in the training data but follow the distribution of the training data (Sohl-Dickstein et al., 2015; Song & Ermon, 2020). This generative modeling technique has proven to be very effective for modeling high-dimensional, perceptual data such as images (Ho et al., 2020). In recent years, diffusion models have become the de-facto approach fo